What DevOps for Data Really Means

DevOps for Data is not about fixing pipelines or deploying models. It’s about designing systems that remain reliable, secure, and predictable as data and ML teams grow. Most teams feel the pain long before they understand the role.

1. Why This Article Exists

Most teams start using the words DevOps, DataOps, and MLOps long before they agree on what those roles actually mean.

In early‑stage startups, this ambiguity often feels convenient. One engineer trains models, deploys pipelines, manages permissions, and fixes production issues. Fewer handoffs, faster decisions, less process.

The problem is that this setup doesn’t scale.

As data volumes grow, more stakeholders rely on models, and production incidents become more frequent, teams discover that the issue is not tooling or individual skill. The issue is that critical responsibilities were never explicitly owned by anyone.

This article exists to clarify one role that is often misunderstood or introduced too late: DevOps for Data.

It is written for CTOs and technical founders building their first data or ML platform, as well as for ML engineers and data scientists who increasingly find themselves responsible for infrastructure decisions. The goal is not to introduce another label, but to explain why role clarity becomes a prerequisite for reliability and sustainable growth.

2. Who Is Who: Data Engineer, ML Engineer, DevOps for Data

In healthy data teams, different roles focus on fundamentally different problems.

Data engineers are primarily concerned with how data is ingested, transformed, and stored. Their work shapes the analytical backbone of the company: pipelines, schemas, and data models that downstream systems depend on.

ML engineers focus on models themselves — training, evaluation, feature logic, and inference. Their success is measured by model quality, iteration speed, and adaptability to changing data.

DevOps for Data operates in a different dimension altogether. This role is responsible for how safely and predictably the system operates over time: CI/CD, environment separation, access control, observability, and operational guardrails.
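Observability is the easiest of these responsibilities to make concrete. Below is a minimal, hypothetical metric check. It assumes you already collect a daily model metric, compares the latest value with the mean of recent history, and returns an alert message when the relative drop exceeds a threshold. `MetricCheck`, `daily_auc`, and the 5% threshold are illustrative assumptions, not a standard. Real setups usually delegate this to a monitoring system.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class MetricCheck:
    """Hypothetical check: alert when a metric falls too far below its recent baseline."""

    name: str
    max_relative_drop: float  # 0.05 means "alert on a drop of more than 5%"

    def evaluate(self, history: List[float], latest: float) -> Optional[str]:
        if not history:
            return None  # no baseline yet, so nothing to compare against
        baseline = mean(history)
        if baseline == 0:
            return None  # a relative drop is undefined for a zero baseline
        drop = (baseline - latest) / baseline
        if drop > self.max_relative_drop:
            return (
                f"{self.name} dropped {drop:.0%} below its baseline "
                f"({latest:.3f} vs {baseline:.3f})"
            )
        return None


check = MetricCheck(name="daily_auc", max_relative_drop=0.05)
print(check.evaluate([0.81, 0.80, 0.82], latest=0.80))  # None: within tolerance
print(check.evaluate([0.81, 0.80, 0.82], latest=0.70))  # an alert message
```

Returning a message instead of sending it keeps the check easy to test. Routing it to a pager or chat channel is a separate, deployment-specific concern.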

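The most important distinction is ownership: data engineers own how data flows into the system, ML engineers own how models behave, and DevOps for Data owns how safely and predictably the whole system operates over time.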

Problems emerge when these boundaries blur. Data engineers end up making infrastructure decisions without proper abstractions. ML engineers deploy models without reproducibility guarantees. DevOps engineers are pulled into debugging logic they didn’t design. None of these failures are about competence — they are about unclear ownership.

3. Trade‑offs by Role

Each role in a data team brings real strengths — and natural limits. Systems usually break not because a role is weak, but because teams expect one role to absorb all trade‑offs at once.

Data engineers bring a deep understanding of business logic and data semantics, which enables fast iteration on pipelines and schemas. However, when they are forced to manage infrastructure implicitly, they often become manual operators of fragile systems rather than designers of scalable ones.

ML engineers excel at experimentation and tight feedback loops between data and model performance. But when production concerns are treated as secondary, reproducibility and operational risk quietly accumulate.
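Reproducibility starts with being able to say exactly which artifact was built from which inputs. Below is a minimal sketch using only the Python standard library. It assumes a model is a single file and its training configuration is a JSON-serializable dict, and it records content hashes of both, plus a source commit, in a manifest. The manifest fields, the `build_manifest` helper, and the commit value are hypothetical. Dedicated tools such as MLflow or DVC cover this in practice.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(model_path: Path, config: dict, git_commit: str) -> dict:
    """Hypothetical manifest: enough to answer 'which model is this, built from what?'."""
    # sort_keys makes the config hash independent of dict insertion order.
    config_bytes = json.dumps(config, sort_keys=True).encode("utf-8")
    return {
        "model_sha256": sha256_of(model_path),
        "config_sha256": hashlib.sha256(config_bytes).hexdigest(),
        "config": config,
        "git_commit": git_commit,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


# Usage with a throwaway file standing in for real model weights.
with tempfile.TemporaryDirectory() as tmp:
    model_file = Path(tmp) / "model.bin"
    model_file.write_bytes(b"placeholder weights")
    manifest = build_manifest(model_file, {"lr": 0.01, "epochs": 5}, git_commit="abc1234")
    print(json.dumps(manifest, indent=2))
```

Storing a manifest like this next to every deployed artifact is what makes the question "which model is in production?" answerable later.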

DevOps for Data provides stability, security, and clear operational ownership. The downside is that its value is not immediately visible to the business — which is why this role is often introduced only after repeated incidents.

A useful summary is simple: systems fail when responsibilities are misaligned, not when people lack skill.

4. Do You Actually Need DevOps for Data?

Teams rarely decide upfront that they need DevOps for Data. Instead, they notice a pattern of uncomfortable symptoms that slowly become normal.

Below is a practical checklist you can use to assess your current state:

| Symptom | What It Signals |
|----|----|
| Models are deployed manually | No reproducibility |
| One script controls most workflows | No isolation or versioning |
| Everyone has access to all datasets | Missing security boundaries |
| Nobody knows which model is in production | No tracking or lineage |
| Metrics drop without a clear explanation | No monitoring or alerts |
| Migrations feel risky and stressful | No infrastructure automation |

If two or more of these apply, the issue is no longer operational friction. It is an architectural problem, even if it still looks like a process issue on the surface.
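The checklist is easy to turn into a repeatable self-assessment, for example during a quarterly review. Here is a minimal sketch. The symptom-to-signal pairs come straight from the table, the threshold of two matches the rule of thumb above, and the function name `assess` is illustrative.

```python
from typing import List, Set, Tuple

# Symptom -> signal pairs copied from the checklist table.
SYMPTOMS = {
    "Models are deployed manually": "No reproducibility",
    "One script controls most workflows": "No isolation or versioning",
    "Everyone has access to all datasets": "Missing security boundaries",
    "Nobody knows which model is in production": "No tracking or lineage",
    "Metrics drop without a clear explanation": "No monitoring or alerts",
    "Migrations feel risky and stressful": "No infrastructure automation",
}

# "Two or more" is the rule of thumb from the text, not a calibrated threshold.
ARCHITECTURAL_THRESHOLD = 2


def assess(observed: Set[str]) -> Tuple[List[str], bool]:
    """Return (signals, is_architectural) for a set of observed symptoms."""
    unknown = observed - set(SYMPTOMS)
    if unknown:
        raise ValueError(f"Unknown symptoms: {sorted(unknown)}")
    # Iterate over SYMPTOMS so the signals keep the table's order.
    signals = [signal for symptom, signal in SYMPTOMS.items() if symptom in observed]
    return signals, len(signals) >= ARCHITECTURAL_THRESHOLD


signals, architectural = assess({
    "Models are deployed manually",
    "Nobody knows which model is in production",
})
print(signals)        # ['No reproducibility', 'No tracking or lineage']
print(architectural)  # True
```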

5. Common Startup Mistakes

Early‑stage teams tend to repeat the same mistakes, not because they lack experience, but because growth outpaces structure.

Roles remain blurred for too long, making reliability everyone’s responsibility — and therefore nobody’s. CI/CD exists for application code, but not for data pipelines or models. Development and production environments are not clearly separated, allowing experiments to leak into critical systems. Infrastructure and jobs are migrated manually, introducing subtle inconsistencies that slowly erode trust.
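Separating development from production can start with a small guardrail in every job's entry point. The minimal sketch below assumes two hypothetical conventions: an `APP_ENV` variable names the target environment, and a `CI` variable set to `true` marks runs inside the CI/CD pipeline (several hosted CI services set `CI=true`, but check yours). The job refuses to start if the target is missing or misspelled, and it refuses to touch production from anywhere but CI.

```python
import os
from collections.abc import Mapping


class UnsafeTargetError(RuntimeError):
    """Raised when a job targets an environment it is not allowed to touch."""


ALLOWED_ENVS = {"dev", "staging", "prod"}


def resolve_target_env(environ: Mapping = os.environ) -> str:
    """Return the target environment or refuse to run.

    Hypothetical conventions: APP_ENV names the target; CI=true marks a CI/CD run.
    """
    env = environ.get("APP_ENV")
    if env not in ALLOWED_ENVS:
        # No silent default: a missing or misspelled value must never fall through to prod.
        raise UnsafeTargetError(f"APP_ENV must be one of {sorted(ALLOWED_ENVS)}, got {env!r}")
    if env == "prod" and environ.get("CI", "").lower() != "true":
        # Experiments run from laptops or notebooks cannot reach production.
        raise UnsafeTargetError("Refusing to target prod outside the CI/CD pipeline")
    return env


print(resolve_target_env({"APP_ENV": "dev"}))                 # dev
print(resolve_target_env({"APP_ENV": "prod", "CI": "true"}))  # prod
try:
    resolve_target_env({"APP_ENV": "prod"})
except UnsafeTargetError as err:
    print(err)  # Refusing to target prod outside the CI/CD pipeline
```

Failing loudly on a missing value matters more than the exact variable names. Most accidental production writes come from defaults nobody noticed.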

These failures are often blamed on missing tools. In reality, they come from postponed decisions about ownership and operational boundaries.

6. What DevOps for Data Actually Looks Like

A mature DevOps for Data setup is usually simpler than people expect. It does not require an enterprise platform or a large team. What it does require is consistency.

Infrastructure is defined as code so environments can be reproduced. Data and model changes go through CI/CD rather than manual deployment. Experiments, artifacts, and configurations are versioned and traceable. Access to sensitive data is restricted by default. Pipelines and models are observable, not opaque.
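"Restricted by default" means the absence of a rule is a denial, not an approval. Below is a minimal sketch of that logic, assuming access is granted per role and per dataset. The role and dataset names are hypothetical. On a real platform this is typically enforced by the warehouse's or cloud provider's access controls rather than in application code.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class AccessPolicy:
    """Hypothetical deny-by-default policy: reads are allowed only if explicitly granted."""

    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, role: str, dataset: str) -> None:
        self.grants.setdefault(role, set()).add(dataset)

    def can_read(self, role: str, dataset: str) -> bool:
        # Anything not explicitly granted is denied, including unknown roles.
        return dataset in self.grants.get(role, set())


policy = AccessPolicy()
policy.grant("ml_engineer", "features.training")
policy.grant("data_engineer", "raw.events")

print(policy.can_read("ml_engineer", "features.training"))  # True
print(policy.can_read("ml_engineer", "raw.customers"))      # False: never granted
print(policy.can_read("analyst", "raw.events"))             # False: unknown role
```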

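The unifying principle is straightforward: consistency. Every environment, pipeline, and model change follows the same reproducible, traceable, and observable path instead of depending on manual steps.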

7. Final Takeaways

DevOps for Data is often misunderstood as a supporting function — someone who “keeps things running.” In reality, it is a leverage role that determines whether growth is predictable or fragile.

Teams that clarify this role early spend less time firefighting later. ML and data engineers stay focused on their core work instead of compensating for missing infrastructure decisions. Reliability becomes a property of the system, not a heroic effort by individuals.

Ignoring DevOps for Data doesn’t remove the work. It simply hides it until the system becomes too complex to reason about safely.

\

Ayrıca Şunları da Beğenebilirsiniz

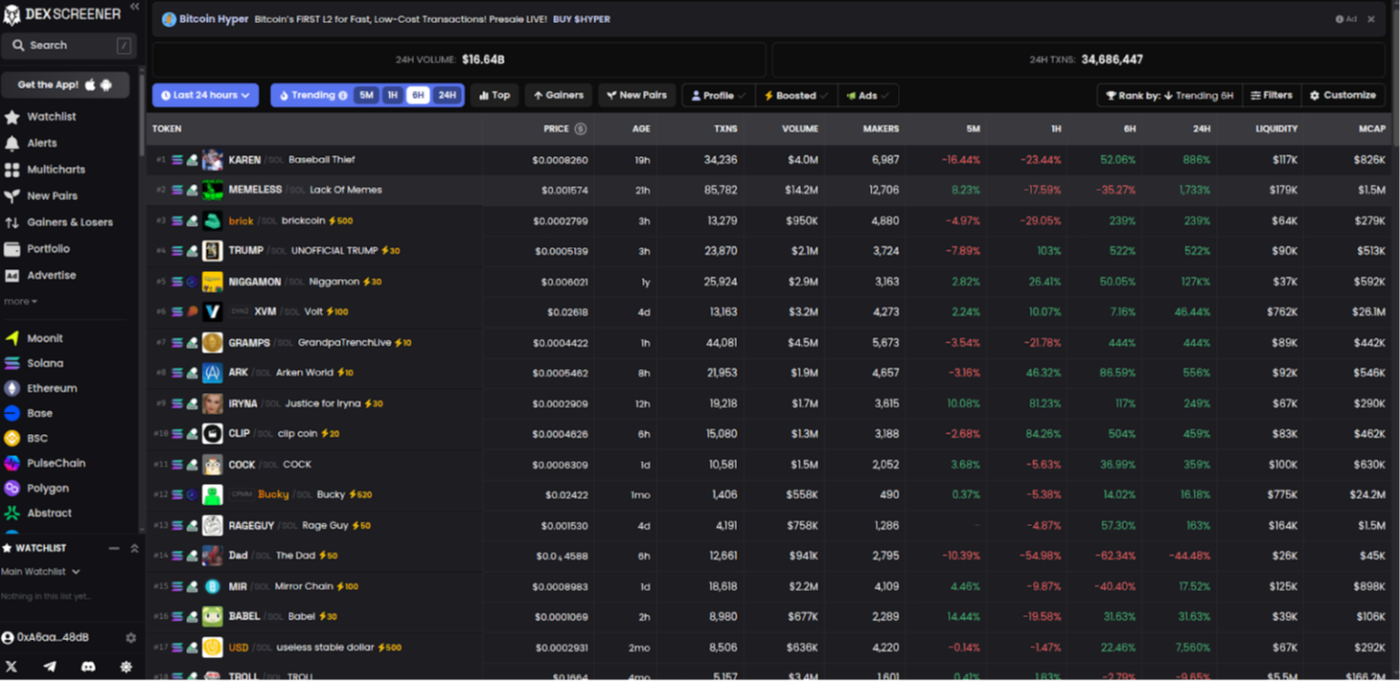

Building a DEXScreener Clone: A Step-by-Step Guide

China Blocks Nvidia’s RTX Pro 6000D as Local Chips Rise