AI Implementation Cost Saving Strategies

When implementing artificial intelligence (AI) across an enterprise, there are several cost drivers to consider.

1. Data Acquisition & Management

When managing data acquisition, areas to review include data labelling and annotation, along with other data-specific characteristics such as data quality, data volume and variety, data security, and data governance for AI.

For supervised learning, data labelling and annotation are labor-intensive processes that are often outsourced. Poor labelling leads to poor models and wasted training cycles, a problem that is far more prevalent and costly in AI than in most traditional software.

The saying “garbage in, garbage out” is amplified in AI, especially when it comes to data quality. Low-quality, biased, or insufficient data directly translates to:

- Increased Training Costs: Models need more data and longer training times to compensate for noise.

- Poor Model Performance: Leading to re-training (more cost), inaccurate outputs, and potential business losses.

- Higher Debugging & Iteration Costs: Diagnosing why an AI model performs poorly often leads back to data issues.

- Bias Mitigation Expenses: Addressing algorithmic bias due to biased data can require significant data augmentation, relabelling, or specialized model fairness techniques.

AI often requires massive, diverse datasets. This drives up storage costs, data transfer (egress) costs, and the complexity of data pipelines significantly more than traditional applications do. Proving the origin and quality of the data used to train AI models is also becoming a regulatory and ethical requirement, which adds a new layer of governance costs.

Data security for AI must go beyond restricting information to authorized users. It also has to address the significantly more complex problem of shielding data from AI models that should not have access to it, whether for regulatory, ethical, or IP-protection reasons.

2. Infrastructure & Computational Resources

When sourcing various infrastructure and computational assets for AI implementation, several factors could affect costs, such as specialized hardware requirements, compute-intensive training costs, and recurring inference costs.

- Specialized Hardware (GPUs/TPUs) Dependence: Unlike most traditional software that runs on standard CPUs, AI (especially deep learning) heavily relies on expensive, powerful GPUs or custom AI chips (like AWS Inferentia/Trainium or Google TPUs). This is a primary cost differentiator.

- High Training Costs (Compute-Intensive):

  - Duration of Training: Training large AI models can take days, weeks, or even months, consuming vast amounts of compute resources continuously.

  - Experimentation & Iteration: Data scientists constantly train and retrain models with different parameters and datasets, leading to significant, often unpredictable, compute consumption.

  - Large Language Models (LLMs): Training and fine-tuning foundation models for generative AI are astronomically expensive, requiring massive, distributed computing power.

- Inference Costs (Recurring & Scalable): Running AI models in production (inference) incurs continuous, per-request costs that scale directly with usage, which often exceed training costs over the lifecycle of an application. This includes CPU/GPU usage for predictions and API calls to third-party AI services.
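As a rough illustration of how recurring inference spend can overtake training spend, the following sketch compares the two over one year. Every name and figure in it (`CostAssumptions`, the prices, the traffic volume) is a hypothetical placeholder chosen for the arithmetic, not a benchmark or a vendor quote.

```python
from dataclasses import dataclass


@dataclass
class CostAssumptions:
    """Hypothetical figures for illustration only; substitute real quotes."""
    training_cost: float         # cost of one training run, in USD
    retrains_per_year: int       # training runs per year, including retraining
    cost_per_1k_requests: float  # inference cost per 1,000 requests, in USD
    requests_per_day: int        # average production traffic


def yearly_training_cost(a: CostAssumptions) -> float:
    """Total spend on training runs over one year."""
    return a.training_cost * a.retrains_per_year


def yearly_inference_cost(a: CostAssumptions) -> float:
    """Total spend on serving predictions over one year."""
    return a.requests_per_day * 365 / 1000 * a.cost_per_1k_requests


def breakeven_requests_per_day(a: CostAssumptions) -> float:
    """Daily traffic at which yearly inference spend equals yearly training spend."""
    return yearly_training_cost(a) * 1000 / (365 * a.cost_per_1k_requests)


# Placeholder scenario: four training runs per year, half a million requests per day.
example = CostAssumptions(
    training_cost=50_000.0,
    retrains_per_year=4,
    cost_per_1k_requests=2.0,
    requests_per_day=500_000,
)
```

With these placeholder values, training comes to 200,000 USD per year and inference to 365,000 USD per year, and inference overtakes training once traffic passes roughly 274,000 requests per day. The point of the sketch is the shape of the calculation: training is a step cost, while inference grows linearly with usage.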

3. AI Model Development & Deployment

From API costs to drift management and RAG system investments, AI model development and deployment comes with its own list of cost considerations.

- Open-Source vs. Proprietary AI Models/APIs:

  - API Costs: Using pre-trained models via APIs (e.g., OpenAI, Google AI) incurs per-token or per-call fees that scale with usage. Benchmarking different vendors is crucial.

  - Open-Source Hidden Costs: While seemingly “free,” open-source models still require significant infrastructure, specialized talent to deploy and maintain, and ongoing fine-tuning costs.

- Model Retraining & Drift Management: AI models degrade over time as real-world data changes (data drift, concept drift). Budgeting for frequent retraining and continuous monitoring for model performance degradation is a unique AI operational cost.

- Hyperparameter Tuning: The iterative process of finding optimal model configurations (hyperparameters) requires many training runs, directly impacting compute costs.

- RAG (Retrieval-Augmented Generation) System Costs: For generative AI, building and maintaining RAG systems involves costs for:

  - Vector Database Storage and Operations: Storing and querying embeddings.

  - Embedding Generation: The computational cost of creating vector embeddings from documents.

  - Frequent Re-indexing/Updates: Re-embedding and re-indexing content as knowledge bases change.
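The three RAG cost components listed above can be put into a back-of-the-envelope model. This is a minimal sketch under stated assumptions: the `RagAssumptions` fields, the prices, and the 4-bytes-per-dimension (float32) storage estimate are illustrative, and real vector databases add index and metadata overhead that is not modeled here.

```python
from dataclasses import dataclass

BYTES_PER_FLOAT32 = 4  # assumes embeddings are stored as float32 values


@dataclass
class RagAssumptions:
    """Hypothetical inputs; replace them with figures from your own vendor."""
    num_chunks: int                        # document chunks in the knowledge base
    tokens_per_chunk: int                  # average tokens per chunk
    embed_price_per_million_tokens: float  # USD per 1M tokens embedded
    vector_dim: int                        # embedding dimensionality
    storage_price_per_gb_month: float      # USD per GB-month of vector storage
    monthly_churn: float                   # fraction of chunks re-embedded monthly


def initial_embedding_cost(a: RagAssumptions) -> float:
    """One-off cost of embedding the whole knowledge base."""
    total_tokens = a.num_chunks * a.tokens_per_chunk
    return total_tokens / 1_000_000 * a.embed_price_per_million_tokens


def monthly_storage_cost(a: RagAssumptions) -> float:
    """Raw vector storage only; index and metadata overhead are ignored."""
    gigabytes = a.num_chunks * a.vector_dim * BYTES_PER_FLOAT32 / 1e9
    return gigabytes * a.storage_price_per_gb_month


def monthly_reindex_cost(a: RagAssumptions) -> float:
    """Cost of re-embedding the share of chunks that changed this month."""
    return initial_embedding_cost(a) * a.monthly_churn


def monthly_running_cost(a: RagAssumptions) -> float:
    """Recurring monthly cost: storage plus re-indexing (query cost excluded)."""
    return monthly_storage_cost(a) + monthly_reindex_cost(a)
```

Query-time costs (vector search operations and the extra prompt tokens consumed by retrieved context) are deliberately left out; in practice they may dominate and would need their own terms.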

4. The “Human-in-the-Loop” & Operational Costs

There is also ongoing operational labor required after a model is deployed.

- Expert Oversight: High-stakes AI (e.g., medical, legal, or financial) requires subject matter experts (SMEs) to review AI outputs. This “Human-in-the-Loop” cost is a recurring operational expense.

- Prompt Engineering & Tuning: For Generative AI, there is a continuous cost in training staff to write effective prompts and updating those prompts as models evolve.

- AI Literacy Training: The cost of upskilling the broader workforce to use these tools effectively is often ignored but vital for ROI.

Cost Saving Strategies

Cloud providers increasingly offer industry-specific AI models that are pre-trained on vertical-specific content and context. As a result, only organization-specific data must be labelled, which significantly reduces effort and is a clear advantage of cloud-based AI usage.

Optimizing AI-specific workloads also reduces compute use and avoids unnecessary costs. Examples include:

- Financial Engineering (FinOps for AI): Broader cloud financial management strategies play an important role in controlling AI compute spend:

  - Reserved Instances/Savings Plans: Committing to compute power for 1–3 years can save up to 70% compared to on-demand pricing, depending on the provider and commitment terms.

  - Token Management: Strategies like prompt caching (storing common queries to avoid re-processing) and output limiting (capping the length of AI responses) are essential for controlling API bills.

  - Tiered Inference: Routing simple queries to cheap models and complex queries to expensive models keeps the average cost per request down.

- Autoscaling for Inference: Dynamically scaling GPU/CPU resources based on inference demand is crucial to avoid over-provisioning and wasted spend.

- Model Compression Techniques: Quantization, pruning, and knowledge distillation reduce model size and complexity, lowering the compute needed for both training and inference. This is an AI-specific optimization.

- Architectural Heterogeneity: Modern cost-saving strategies go beyond custom chips and also use CPU-based inference for small language models (SLMs). For many tasks, expensive GPUs are no longer the primary cost differentiator, because optimizations like 4-bit quantization allow models to run on standard, much cheaper hardware.

- The “Small Model” Strategy: One of the biggest current cost-savers is using SLMs or DLMs (Domain Language Models) like Mistral or Phi-3 for specific tasks instead of “God-models” like GPT-4 or Claude 3.5 Sonnet.

- Model Fine-Tuning vs. RAG: While fine-tuning is often more expensive upfront, it can be cheaper in the long run for specific, high-volume tasks because it reduces the “token count” needed in every prompt.

- The “Cost of Inaction”: Sometimes the most expensive strategy is not implementing AI in a high-value area, allowing competitors to gain a lead.
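Two of the tactics listed above, token management and tiered inference, can be sketched together. The example below is a minimal illustration, not a production design: `TieredRouter`, the word-count heuristic for "simple" queries, and the model callables are all hypothetical, and the cache is a plain application-level exact-match cache on normalized prompts.

```python
import hashlib
from typing import Callable, Dict

# Hypothetical model interface: (prompt, max_output_tokens) -> response text.
ModelFn = Callable[[str, int], str]


class TieredRouter:
    """Answers from cache when possible, otherwise routes to a cheap or expensive model."""

    def __init__(
        self,
        small_model: ModelFn,
        large_model: ModelFn,
        max_prompt_words_for_small: int = 50,
        max_output_tokens: int = 256,
    ) -> None:
        self.small_model = small_model
        self.large_model = large_model
        self.max_prompt_words_for_small = max_prompt_words_for_small
        self.max_output_tokens = max_output_tokens  # output limiting
        self._cache: Dict[str, str] = {}
        self.calls = {"cache": 0, "small": 0, "large": 0}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def _is_simple(self, prompt: str) -> bool:
        # Naive heuristic assumed for illustration: short prompts count as simple.
        return len(prompt.split()) <= self.max_prompt_words_for_small

    def answer(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.calls["cache"] += 1
            return self._cache[key]
        tier = "small" if self._is_simple(prompt) else "large"
        model = self.small_model if tier == "small" else self.large_model
        response = model(prompt, self.max_output_tokens)
        self.calls[tier] += 1
        self._cache[key] = response
        return response
```

In a real deployment the routing decision would more likely come from a classifier or a confidence score than from prompt length, and the cache would need an expiry policy. The `calls` counter is there so the share of traffic served by each tier can be measured before and after tuning the heuristic.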

Portfolio Management & Landscape Data

Given the unique complexities and interdependencies of AI systems, a comprehensive Enterprise Portfolio Management practice, especially when enriched with landscape data, would provide a strong basis for managing or lowering costs.

Portfolio Management’s capabilities in business process modelling, application landscape mapping, and data flow diagrams are uniquely positioned to provide the necessary visibility. By integrating AI systems as components within these Portfolio Management artifacts, an organization can visualize:

- Which AI models support which critical business capabilities.

- The data flows feeding these critical AI models.

- The dependencies between mission-critical AI and other enterprise systems.

- Which AI projects fail to meet their KPIs and are candidates for decommissioning (“zombie AI”). Portfolio Management should include a “kill switch” for such projects, because maintaining a model that provides no business value is a significant “silent” drain on resources.

- The human oversight points, or lack thereof, in critical AI-driven processes.

This provides a crucial basis for proactive risk assessment, targeted governance, and prioritized investment in robustness, explainability, and auditing for the most impactful AI deployments, thereby mitigating the potentially crippling costs of failure in mission-critical areas.

Portfolio Management provides the basis for reducing the costs of AI in three ways.

Holistic Visibility and Strategic Decision Making: Portfolio Management provides a single source of truth for all IT assets, applications, data, and business capabilities. This holistic view is crucial for identifying interdependencies, redundancies, and opportunities for optimization specific to AI. Armed with landscape data, Portfolio Management enables data-driven decisions on where to invest in AI, which AI use cases align with strategic goals, and how to best leverage existing resources.

Reduced Duplication/Redundancy and Improved Governance/Compliance: By mapping existing data sources, infrastructure, and even pre-trained models, Portfolio Management prevents teams from re-creating assets or subscribing to redundant services. Portfolio Management ensures that AI initiatives adhere to enterprise standards, security policies, and regulatory requirements from the outset, avoiding costly rework or penalties later.

Optimized Resource Utilization and Accelerated Time-to-Value: Portfolio Management helps to “right-size” AI environments by understanding the demand and supply of compute, storage, and data, leading to more efficient resource allocation. A well-governed architectural landscape allows for faster identification of suitable data, existing infrastructure, and reusable components, accelerating AI project delivery and time-to-value.

Key cost management measures to take when using technologies such as AI are:

- Start with small, easily manageable pilot projects

- Cut where it doesn’t hurt the business strategy and required innovation

- Manage security and compliance

- Make smart decisions on internal vs external talent and skills

- Invest in the right infrastructure

Architectural Maturity: The “AI API Gateway”

Portfolio Management enables the creation of an AI API Gateway. Centralizing all AI calls through a single gateway allows an organization to:

- Enforce rate limiting to prevent runaway costs.

- Implement cost tracking by department or project.

- Switch providers easily if a competitor lowers their prices.
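The three gateway responsibilities above can be illustrated with a small in-process sketch. It assumes a hypothetical provider interface (a callable returning the response text and the number of tokens used) and hypothetical per-1,000-token prices; a real gateway would sit behind a network API and persist its counters.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Tuple

# Hypothetical provider interface: prompt -> (response text, tokens used).
Provider = Callable[[str], Tuple[str, int]]


class RateLimitExceeded(Exception):
    """Raised when a department exceeds its calls-per-minute budget."""


class AIGateway:
    def __init__(
        self,
        providers: Dict[str, Provider],
        price_per_1k_tokens: Dict[str, float],
        active: str,
        max_calls_per_minute: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.providers = providers
        self.prices = price_per_1k_tokens
        self.active = active
        self.max_calls = max_calls_per_minute
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._calls: Dict[str, Deque[float]] = defaultdict(deque)
        self.spend: Dict[str, float] = defaultdict(float)  # USD per department

    def switch_provider(self, name: str) -> None:
        """Point all subsequent calls at a different provider."""
        if name not in self.providers:
            raise KeyError(name)
        self.active = name

    def call(self, department: str, prompt: str) -> str:
        now = self.clock()
        window = self._calls[department]
        # Sliding window: drop timestamps older than 60 seconds.
        while window and now - window[0] >= 60.0:
            window.popleft()
        if len(window) >= self.max_calls:
            raise RateLimitExceeded(department)
        window.append(now)
        text, tokens = self.providers[self.active](prompt)
        # Attribute the cost of this call to the calling department.
        self.spend[department] += tokens / 1000 * self.prices[self.active]
        return text
```

Because callers only ever talk to `AIGateway.call`, changing vendors is a single `switch_provider` call rather than a change in every application, and `spend` gives a per-department view that can feed chargeback reports.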

In essence, Portfolio Management acts as the strategic conductor that orchestrates the various elements of an AI ecosystem, ensuring they are aligned, optimized, and managed in a way that inherently drives down costs by fostering efficiency, reusability, and informed decision-making.

You May Also Like

Cashing In On University Patents Means Giving Up On Our Innovation Future

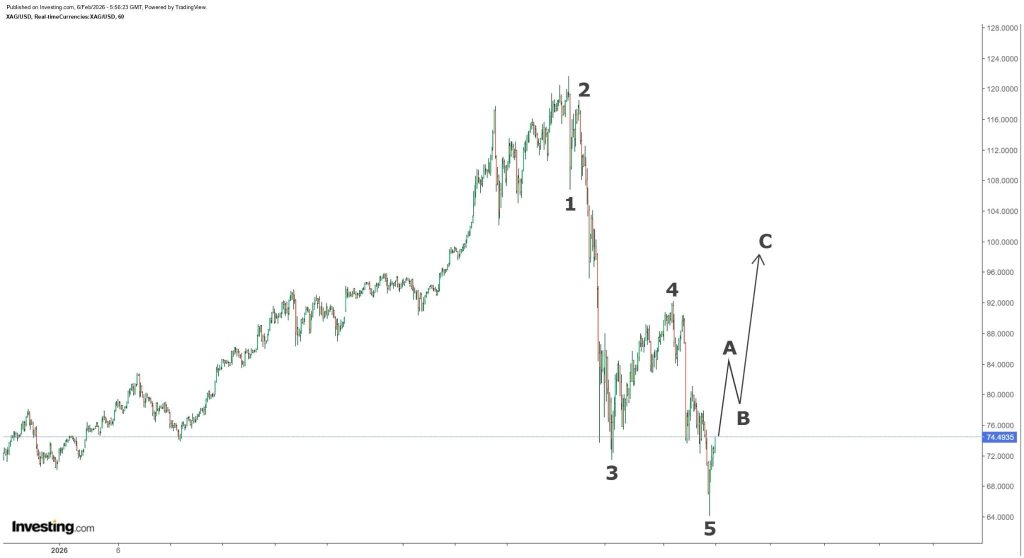

Silver Price Crash Is Over “For Real This Time,” Analyst Predicts a Surge Back Above $90